SPARK PYTHON RUNNER LOGS INSTALL

This part of the Dockerfile installs the AWS CLI and the Google Cloud SDK, then sets up the virtual environment:

    # This will be removed
    # in future versions to make it completely portable
    RUN pip3 install awscli

    WORKDIR /opt
    RUN curl … > install.sh
    RUN bash /opt/install.sh --install-dir=/opt
    ENV PATH $PATH:/opt/google-cloud-sdk/bin
    WORKDIR /root

    # Virtual environment
    ENV VENV /opt/venv
    RUN python3 -m venv $…

…streamFilter=typeLogStreamPrefix"

More configuration

SPARK PYTHON RUNNER LOGS UPDATE

Flyte makes it easy to version and manage dependencies using containers. The K8s Spark plugin brings all the benefits of containerization to Spark without needing to manage special Spark clusters.

It is extremely easy to get started, with complete isolation between workloads. Every job runs in isolation and has its own virtual cluster, so there is no more nightmarish dependency management.

Short-running, bursty jobs are not a great fit because of the container overhead. No interactive Spark capabilities are available with Flyte K8s Spark, which is more suited to running ad hoc and scheduled jobs.

Step 1: Deploy Spark Plugin in the Flyte Backend

Flyte Spark uses the Spark On K8s Operator and a custom-built Flyte Spark Plugin. This is a backend plugin which has to be enabled in your deployment. You can follow the steps mentioned in the K8s Plugins section.

You can optionally configure the plugin as per the backend config structure. An example config begins as follows:

    plugins:
      spark:
        spark-config-default:
          # We override the default credentials chain provider for Hadoop so that
          # it can use the serviceAccount based IAM role or ec2 metadata based.

Spark needs a special service account (with associated role and role bindings) to create executor pods. If you use IAM for Service accounts or GCP Workload identity, you need to update the service account to include this.

You can use Flyte cluster resource manager to manage creating the Spark service account per namespace. For this, you need to add the cluster resource templates (refer to the spark.yaml files).

The Dockerfile for the Spark image begins:

    FROM apache/spark-py:3.3.1
    LABEL …

    ARG spark_uid=1001

    # Install Python3 and other basics
    USER 0
    RUN apt-get update && apt-get install -y python3 python3-venv make build-essential libssl-dev python3-pip curl wget

    # Install AWS CLI to run on AWS (for GCS install GSutil).

This plugin has been tested at scale, and more than 100k Spark Jobs run through Flyte at Lyft. This still needs a large capacity on Kubernetes and careful configuration. We recommend using multi-cluster mode and enabling Resource Quotas for large and extremely frequent Spark Jobs. The plugin is not recommended for extremely short-running jobs, for which it might be better to use a pre-spawned cluster. A job can be considered short if the runtime is less than 2-3 minutes. In this scenario, the cost of pod bring-up outweighs the cost of execution.

Hands-on tutorial for writing PySpark tasks.
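As a rough illustration of the short-job guidance, the sketch below compares an assumed pod bring-up time with the expected runtime and suggests where a job might run. The 180-second cutoff is an assumption taken from the upper end of the 2-3 minute range, and `JobProfile`, `overhead_ratio`, and `recommend_backend` are hypothetical names, not part of Flyte.

```python
from dataclasses import dataclass

# Assumption: "2-3 minutes" is read as a 180-second cutoff (the upper end).
SHORT_JOB_THRESHOLD_S = 180.0


@dataclass
class JobProfile:
    runtime_s: float      # expected Spark execution time, in seconds
    pod_bringup_s: float  # assumed image pull + scheduling + start-up time


def overhead_ratio(job: JobProfile) -> float:
    """Pod bring-up time as a fraction of the execution time."""
    if job.runtime_s <= 0:
        raise ValueError("runtime_s must be positive")
    return job.pod_bringup_s / job.runtime_s


def recommend_backend(job: JobProfile) -> str:
    """Suggest a pre-spawned cluster for short jobs, the K8s plugin otherwise."""
    if job.runtime_s < SHORT_JOB_THRESHOLD_S:
        return "pre-spawned cluster"
    return "k8s spark plugin"


short_job = JobProfile(runtime_s=60, pod_bringup_s=90)
long_job = JobProfile(runtime_s=1800, pod_bringup_s=90)
print(recommend_backend(short_job), overhead_ratio(short_job))
print(recommend_backend(long_job), overhead_ratio(long_job))
```

A ratio above 1 means the job spends longer waiting for pods than executing, which is the situation the guidance warns about.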

The Spark cluster is configured automatically from the SparkConf supplied in the task decorator.
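A minimal sketch of what such a decorated task might look like, assuming `flytekit` and the `flytekitplugins-spark` package are installed. The Spark settings are illustrative values rather than recommendations, and `in_unit_circle` and `estimate_pi` are hypothetical names. The import is guarded so the configuration can still be inspected where the plugin is absent.

```python
import random

# Illustrative Spark settings (assumed values, not recommendations).
SPARK_CONF = {
    "spark.driver.memory": "1000M",
    "spark.executor.memory": "1000M",
    "spark.executor.cores": "1",
    "spark.executor.instances": "2",
}


def in_unit_circle(_: int) -> int:
    """Return 1 if a random point in the unit square lies within distance 1 of the origin."""
    x, y = random.random(), random.random()
    return 1 if x * x + y * y <= 1.0 else 0


try:
    import flytekit
    from flytekit import task
    from flytekitplugins.spark import Spark
except ImportError:
    # flytekit or the Spark plugin is not installed: skip the task definition.
    task = None

if task is not None:

    @task(task_config=Spark(spark_conf=SPARK_CONF))
    def estimate_pi(partitions: int) -> float:
        # The plugin exposes the configured Spark session on the execution context.
        sess = flytekit.current_context().spark_session
        n = 100_000 * partitions
        hits = (
            sess.sparkContext.parallelize(range(n), partitions)
            .map(in_unit_circle)
            .sum()
        )
        return 4.0 * hits / n
```

The task body is ordinary PySpark code. The only Flyte-specific parts are the `task_config` argument and the session lookup.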

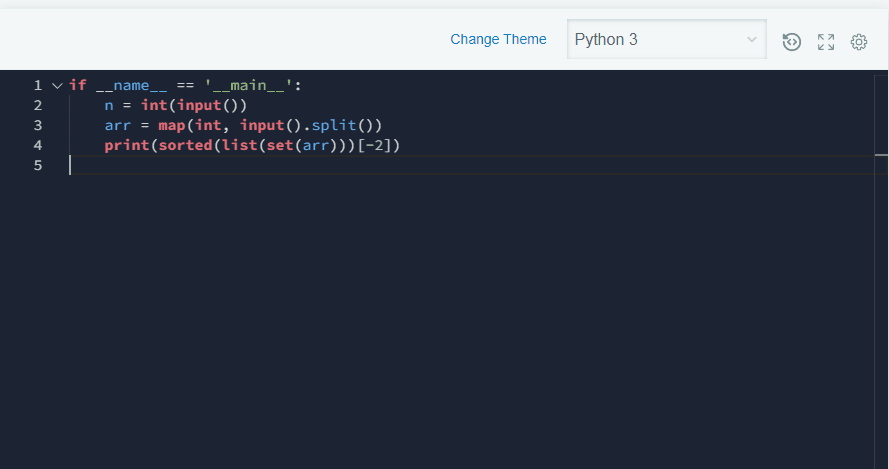

SPARK PYTHON RUNNER LOGS CODE

In Flyte, the cost is amortized because pods are faster to create than a machine, but the penalty of downloading Docker images may affect performance. Also, remember that starting a pod is not as fast as running a process.

Flytekit makes it possible to write PySpark code natively as a task.

Kubernetes Spark Jobs

Tags: Spark, Integration, DistributedComputing, Data, Advanced

Flyte can execute Spark jobs natively on a Kubernetes Cluster, which manages a virtual cluster's lifecycle, spin-up, and tear down. It leverages the open-sourced Spark On K8s Operator and can be enabled without signing up for any service. It is like running a transient Spark cluster: a type of cluster spun up for a specific Spark job and torn down after completion. These clusters are better for production workloads but have an extra cost of setup and teardown.
